The black box problem in supply chains

Artificial intelligence is transforming supply chains, but one persistent barrier remains: trust. Many supply chain leaders view AI as a “black box”, a system that produces answers without revealing the reasoning behind them. When a shipment’s ETA suddenly shifts, planners want to know why, and when a disruption is flagged, they need that insight even more.

This lack of transparency is especially problematic in supply chains, where operational decisions carry high stakes. If an ETA changes, a planner needs to understand not only the new prediction but also the cause-effect chain that led to it: a port delay, a vessel schedule update, or conflicting data from carriers. Without this clarity, AI outputs can feel more like guesses than guidance, which slows adoption and limits value.
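To make the idea of a cause-effect chain concrete, here is a minimal Python sketch of how an ETA revision might be reported together with its causes. It is an illustration only, not project44’s implementation: it assumes, purely for simplicity, that each cause adds a fixed delay in hours, and the `Factor` class and `explain_eta_change` function are hypothetical names. Real ETA models are rarely additive in this way.

```python
from dataclasses import dataclass


@dataclass
class Factor:
    """One hypothetical cause contributing to an ETA revision."""
    cause: str
    delay_hours: float  # positive = later arrival, negative = earlier


def explain_eta_change(previous_eta_hours: float,
                       factors: list[Factor]) -> tuple[float, list[str]]:
    """Return the revised ETA and human-readable reasons, largest impact first.

    Assumes (for illustration only) that each factor's delay simply adds
    to the previous ETA.
    """
    new_eta = previous_eta_hours + sum(f.delay_hours for f in factors)
    # Rank causes by absolute impact so the dominant driver is listed first.
    ranked = sorted(factors, key=lambda f: abs(f.delay_hours), reverse=True)
    reasons = [f"{f.cause}: {f.delay_hours:+.1f} h" for f in ranked]
    return new_eta, reasons


if __name__ == "__main__":
    new_eta, reasons = explain_eta_change(
        72.0,
        [Factor("Vessel schedule update", 4.0),
         Factor("Port congestion", 8.0)],
    )
    print(new_eta)  # 84.0
    for reason in reasons:
        print(reason)
    # Port congestion: +8.0 h
    # Vessel schedule update: +4.0 h
```

Ranking factors by absolute impact puts the dominant cause first, which is the ordering a planner scanning an explanation usually wants.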

Why explainability matters for human planners

Explainability transforms AI from a passive forecasting tool into a collaborative decision-making partner. By showing the logic behind predictions, explainable AI (XAI) enables planners to:

- Validate quickly: If an ETA shifts because of a new carrier schedule, planners can immediately confirm whether the new ETA is credible.

- Adapt confidently: When the root cause of a disruption is clear, teams can redirect inventory, reroute shipments, or adjust staffing with conviction.

- Build organizational trust: Transparent reasoning makes it easier for executives to embrace AI-driven execution, knowing that humans are not abdicating control but enhancing it.

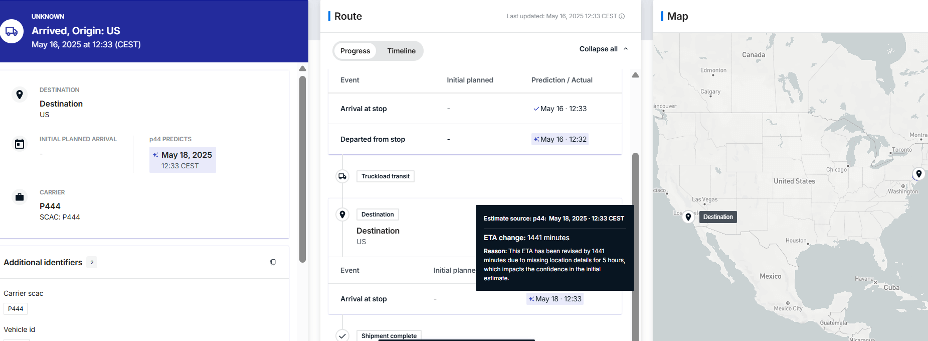

Consider the screenshot above from project44’s platform. The system not only updates the ETA but also traces the chain of logic behind the change; in this case, it flags missing location details that might affect confidence in the ETA. This explanation gives planners a clear view of why the ETA changed and assurance that the system is learning from a large, dynamic set of signals.

From explanation to action: How it works in the platform

Explainability becomes even more powerful when tied directly to the tools planners use every day. At project44, we connect cause-and-effect reasoning with execution workflows that make insights actionable:

- AI Disruption Navigator: When explainable AI highlights a disruption, such as a port delay or weather event, users can immediately see its impact in the Disruption Navigator. This context transforms an abstract ETA change into a concrete event that planners can understand and manage.

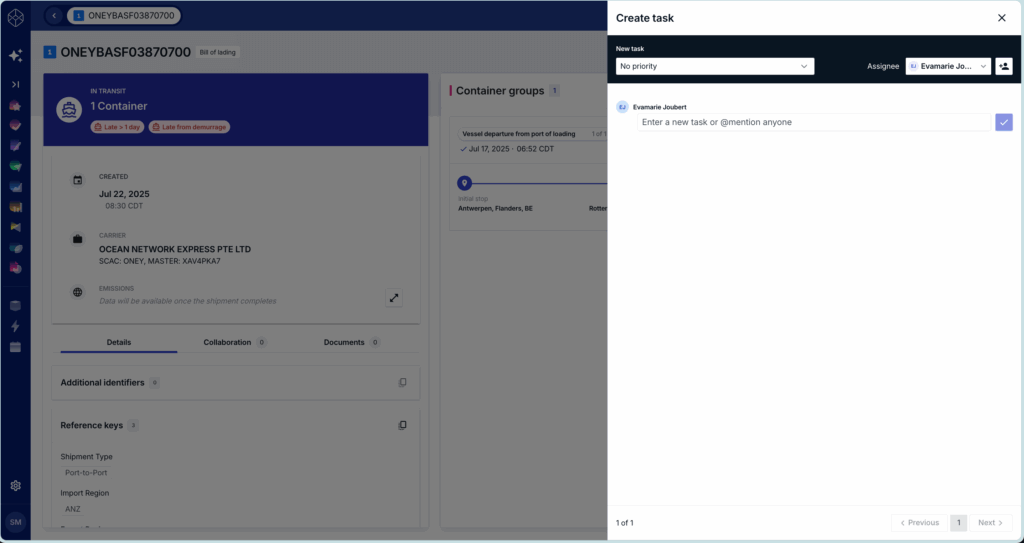

- Task creation: From that same disruption insight, planners can create Tasks directly in the platform, assigning actions, notifying colleagues, or triggering workflows to mitigate the issue. By embedding explainability into action, project44 ensures disruptions don’t just get noticed; they get addressed.

- Movement homepage: At the executive level, explainability builds trust by connecting disruptions and ETA changes back to business outcomes. The Movement homepage provides a bird’s-eye view of key business metrics (inventory at risk, customer impact, and service levels), so leaders see not just isolated predictions but how those predictions shape enterprise performance.

Together, these capabilities tie the why of explainable AI to the what’s next of supply chain execution.

Cause-and-effect transparency as a competitive edge

The difference between an opaque system and an explainable one can determine whether a retailer keeps shelves stocked or misses a critical sales window. Cause-and-effect transparency doesn’t just reduce uncertainty; it becomes a competitive advantage.

For example, when an ETA changes by 12 hours, a planner who understands why can take proactive action, such as adjusting downstream transportation or informing customers before the disruption cascades. Over time, organizations that can harness explainability at scale build more resilient networks, reduce risk exposure, and win customer trust.

Explainable AI also accelerates adoption across the enterprise. Non-technical stakeholders, such as procurement leaders, operations managers, and customer service teams, are more likely to rely on AI outputs when they can see the logic. In effect, explainability becomes the bridge that turns advanced algorithms into business outcomes.

project44’s vision: Trustworthy AI for supply chain execution

At project44, we believe that visibility alone is not enough; the future belongs to explainable, actionable visibility. Our vision of AI-led execution is rooted in three pillars:

- Transparency: Every prediction should be accompanied by the cause-and-effect reasoning behind it.

- Trust: By processing and contextualizing massive datasets—from vessel schedules to location updates—AI builds confidence through consistency and clarity.

- Agility: When disruptions happen, explainable AI empowers human planners to act quickly, without wasting time interpreting opaque models.

This kind of contextual intelligence and actionability is possible because of project44’s unparalleled supply chain dataset, fueled by real-time data from over 1.5 billion shipments and the world’s largest logistics networks. By continuously ingesting and correlating over 7.3 trillion data points, our platform makes it possible not just to predict, but to explain predictions.

Our engineering team is advancing capabilities that allow for real-time processing of event streams on a global scale. The complexity is immense (schedules, delays, weather events, customs holds, and more), but the outcome is simple: a system that can tell you not just what will happen, but why. And because these insights tie directly into platform features like Disruption Navigator, Tasks, and the Movement homepage, they enable immediate, coordinated action across the enterprise.

The road ahead: From insight to impact

The future of supply chains will not be defined by AI models alone, but by explainable AI that inspires confidence and accelerates adoption. Here’s what we see coming next:

- Risk reduction through clarity: Explainability helps organizations not only anticipate disruptions but also quantify their causes, making mitigation strategies more effective.

- Resilience through learning: Transparent AI enables supply chains to adapt continuously, learning from cause-effect feedback loops.

- Customer trust as a differentiator: When companies can proactively explain shipment delays to customers with clear reasoning, they don’t just manage expectations; they build loyalty.

At project44, we are building the foundation for this future. By combining scale, transparency, and explainability, we are redefining what AI-led execution means for the supply chain industry.

Conclusion: The call to action

When predictions are opaque, trust erodes. And in today’s environment, where missed ETAs can cascade into lost revenue and customer churn, the resulting trust gap is unacceptable.

Explainability closes that gap. It replaces guesswork with clarity. It aligns operations, customer service, and executive teams around a shared understanding of what’s happening and why. And when embedded directly into supply chain workflows, it turns insights into immediate, coordinated action.

This is what sets project44 apart. Our AI doesn’t just generate predictions; it reveals the logic and impact of every decision, enabling improved outcomes and turning every disruption into an opportunity to respond better and faster.

This is the turning point: from reactive logistics to intelligent execution. The leaders of tomorrow will be those who don’t just see what’s coming but understand it and move decisively because of it.